Rosie

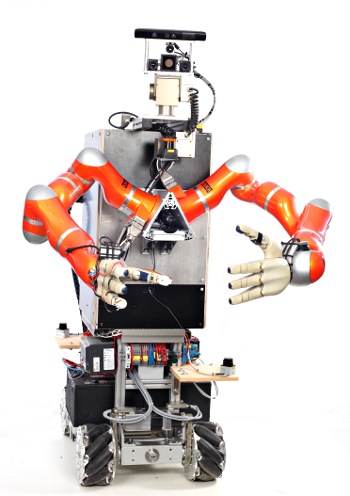

Rosie is built around a KUKA omnidirectional base and two KUKA LWR-4 lightweight arms. Deployed in the Assistive Kitchen environment of the Intelligent Autonomous Systems Group (http://ias.cs.tum.edu), it has been in operation for more than a year. The main objective of the research performed on and around the robot is to develop a system with a very high degree of cognition: one that can intelligently perceive its environment, reason about it, autonomously infer the most plausible actions and, in the end, successfully execute them. The following video demonstrates some of the capabilities developed so far:

Technically, the robot consists of the following components:

Hardware

Actuators

- mecanum-wheeled omnidirectional platform,

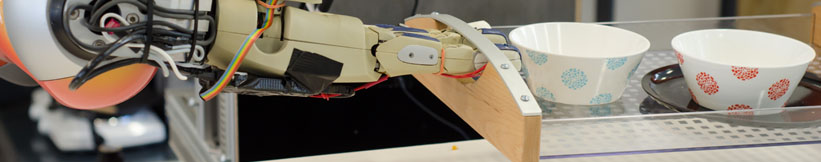

- two KUKA-lightweight LWR-4 arms (7 DOF) mounted on this platform. They have torque sensors in the joints and use these sensors to provide impedance control,

- two four-fingered DLR-HIT hands mounted on the arms, likewise with torque sensors and joint impedance control,

- a Schunk Powercube pan-tilt head.
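
The joint impedance control mentioned for the arms and hands can be pictured as a virtual spring-damper acting on each joint. The sketch below is a generic textbook formulation, not the controller that runs on Rosie; the class name `JointImpedance`, the gain values, and the optional gravity term are assumptions made for illustration only.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class JointImpedance:
    """Hypothetical per-joint spring-damper law (illustration only)."""
    stiffness: Sequence[float]  # virtual spring gains, N*m/rad (assumed values)
    damping: Sequence[float]    # virtual damper gains, N*m*s/rad (assumed values)

    def torque(
        self,
        q_des: Sequence[float],
        q: Sequence[float],
        qd: Sequence[float],
        gravity: Optional[Sequence[float]] = None,
    ) -> List[float]:
        """Return commanded joint torques.

        tau_i = k_i * (q_des_i - q_i) - d_i * qd_i + g_i
        """
        n = len(self.stiffness)
        if not (len(self.damping) == len(q_des) == len(q) == len(qd) == n):
            raise ValueError("all vectors must have one entry per joint")
        g = list(gravity) if gravity is not None else [0.0] * n
        if len(g) != n:
            raise ValueError("gravity vector must have one entry per joint")
        return [
            self.stiffness[i] * (q_des[i] - q[i]) - self.damping[i] * qd[i] + g[i]
            for i in range(n)
        ]


# Example for a 7-DOF arm: every joint is 0.1 rad short of its target
# and moving towards it at 0.5 rad/s.
controller = JointImpedance(stiffness=[100.0] * 7, damping=[10.0] * 7)
tau = controller.torque(q_des=[0.1] * 7, q=[0.0] * 7, qd=[0.5] * 7)
```

In this formulation, lowering the stiffness makes a joint yield more readily to external forces, which is the usual motivation for impedance control on robots that work close to people and objects.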

Sensors

- a Microsoft Kinect 3D camera,

- one Hokuyo UTM-30LX laser scanner mounted on a Powercube tilting module for 3D laser scans (shoulder scanner),

- two Hokuyo URG-04LX laser scanners for navigation and localization,

- a Swiss-Ranger SR4000 time-of-flight camera,

- two SVS-Vistek eco274CVGE 2-megapixel Gigabit Ethernet cameras forming a stereo setup,

- a FLIR thermal camera,

- fingertip sensors for slip and proximity detection.

Software

All the hardware is integrated in the Robot Operating System (ROS) and, in part, in YARP. The code can be found in the tum-ros-pkg repository (http://tum-ros-pkg.sourceforge.net/). Notable software components are grouped into stacks and include:

- (ROS) a perception pipeline using the laser data that can generate (amongst others) a 3D semantic map of the robot's environment (stack: http://tum-ros-pkg.svn.sourceforge.net/viewvc/tum-ros-pkg/mapping/),

- (ROS) a perception architecture which integrates time-of-flight and camera data to perceive objects (stacks: http://tum-ros-pkg.svn.sourceforge.net/viewvc/tum-ros-pkg/mapping/, http://tum-ros-pkg.svn.sourceforge.net/viewvc/tum-ros-pkg/perception/),

- (ROS) a plan-based controller which invokes and monitors perception and actions (stacks: http://www.ros.org/wiki/cram, http://tum-ros-pkg.svn.sourceforge.net/viewvc/tum-ros-pkg/highlevel/),

- (ROS/YARP) arm control using a vector-field controller (providing basic obstacle avoidance),

- (ROS) a simple grasp planner for one-handed grasps of unknown objects, which takes into account the object's form, obstacles (as perceived by the TOF camera), and the feedback from the hand torque sensors,

- (ROS) localization and navigation, using ROS components (stack: navigation),

- (ROS) a simulation environment of our robot in Gazebo; the hands are a work in progress (stacks: simulation, robot_model),

- (ROS) a knowledge processing system designed specifically for autonomous personal robots. It provides mechanisms and tools for action-centered representation, for the automated acquisition of grounded concepts through observation and experience, for reasoning about and managing uncertainty, and for fast inference (stack: http://tum-ros-pkg.svn.sourceforge.net/viewvc/tum-ros-pkg/knowledge/).
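
To give an idea of how a vector-field controller with basic obstacle avoidance, as listed for the arm control above, can work in principle, the sketch below combines an attractive field towards a goal with repulsive fields around obstacles. It is a generic potential-field illustration and not the code from tum-ros-pkg; the function name `vector_field_velocity` and all gains, radii, and speed limits are assumed values.

```python
import math
from typing import List, Sequence


def vector_field_velocity(
    pos: Sequence[float],
    goal: Sequence[float],
    obstacles: Sequence[Sequence[float]],
    k_att: float = 1.0,      # attraction gain (assumed)
    k_rep: float = 0.05,     # repulsion gain (assumed)
    influence: float = 0.3,  # obstacle influence radius in metres (assumed)
    v_max: float = 0.2,      # speed limit in m/s (assumed)
) -> List[float]:
    """Return a Cartesian velocity command for the end effector."""
    # Attractive component: points from the current position to the goal.
    v = [k_att * (g - p) for p, g in zip(pos, goal)]

    # Repulsive component: pushes away from each obstacle inside its
    # influence radius, growing as the distance shrinks.
    for obs in obstacles:
        diff = [p - o for p, o in zip(pos, obs)]
        dist = math.sqrt(sum(c * c for c in diff))
        if 0.0 < dist < influence:
            gain = k_rep * (1.0 / dist - 1.0 / influence) / (dist * dist)
            v = [vi + gain * c / dist for vi, c in zip(v, diff)]

    # Limit the commanded speed while keeping the direction.
    speed = math.sqrt(sum(c * c for c in v))
    if speed > v_max:
        v = [c * v_max / speed for c in v]
    return v


# Free space: the command points straight at the goal.
v_free = vector_field_velocity((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), [])

# An obstacle 10 cm to the side deflects the command away from it.
v_avoid = vector_field_velocity(
    (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), [(0.0, 0.1, 0.0)]
)
```

A purely local scheme like this only provides basic avoidance: it reacts to nearby obstacles but can get stuck where attraction and repulsion cancel out, which is why it is usually paired with higher-level planning.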
