Research

My two main areas of work are knowledge acquisition, representation and reasoning techniques for autonomous robots, and knowledge-based methods for interpreting observations of human everyday activities.

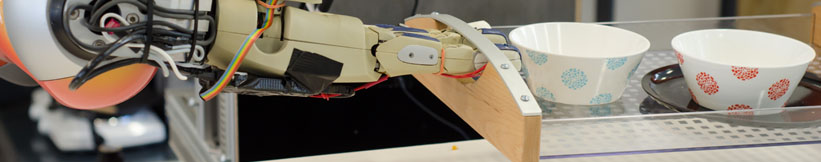

Knowledge processing for autonomous robots

In my work on knowledge representation for robots, I investigate how robots can be equipped with formally represented knowledge about actions, objects, environments, events, processes, etc. Such knowledge is needed to competently perform complex manipulation tasks. A special focus is on modern knowledge processing techniques that make use of information from the Web that was originally created for humans, and that also use the Internet for exchanging knowledge between robots.

In this context, I am involved in the following projects:

- RoboHow: In the RoboHow project, I am working on methods for representing actions to be performed by the robot, as well as actions observed in humans.

- RoboEarth: In this project, I am working on the definition and implementation of the representation language that is used for exchanging knowledge about actions, objects and environment models between robots.

- CoTeSys: The work in the CoTeSys Cluster of Excellence focussed on the development of general knowledge processing techniques that can be used on and by autonomous robots.

- PR2 Beta Program: In the PR2 Beta Program, the IAS group has been awarded a PR2 robot to further develop the CRAM software library. My part in this project is the development of the KnowRob knowledge base.

Models of human everyday activities

In this line of research, I investigate how models of everyday tasks like table setting and meal preparation can be constructed by combining observational data (mainly motion capture data) with formally represented knowledge about these activities. The objectives are, on the one hand, to gain a deeper understanding of how humans perform these tasks in order to transfer this knowledge to robots and, on the other hand, to evaluate and assess task execution, e.g. to model and recognize effects caused by illnesses and disabilities.

In this context, I am involved in the following project:

- CogWatch: This project investigates methods for recognizing motion disorders related to apraxia, and ways to remind patients which actions to perform and how to perform them. Our task is to model and recognize the actions and to build expressive, semantic action representations from observation.

